How will we write in 2030?

Reflecting on the democratization of AI writing tools and how technology is shaping our words... for better or worse? An article that was not (yet) co-written with an AI, but with two human beings: Quentin Franque and Benoît Zante.

AI writing is growing in popularity

The news came as a bombshell. Last week, Jasper, the AI content start-up founded in 2021, announced a record $125 million fundraising round at a valuation of $1.5 billion, which makes it a unicorn, a species that has become a bit rarer these days. The funding follows strong growth for the sector: the AI writing assistant software market was valued at $410.92 million in 2021 and is expected to reach $1,363.99 million by 2030, with a CAGR of 14.27 per cent from 2023 to 2030.
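To get a feel for what such a growth rate means, here is a minimal sketch of the compound annual growth rate formula applied to the two market figures quoted above. The function name `cagr` is ours, and treating 2021 as the base year is an assumption on our part: compounding over those nine years gives a rate of roughly 14 per cent, in line with the quoted one.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values, as a fraction."""
    return (end_value / start_value) ** (1 / years) - 1


# Market figures quoted in the article, in millions of dollars.
market_2021 = 410.92
market_2030 = 1363.99

# Assumption: 2021 is the base year, so growth compounds over nine years.
rate = cagr(market_2021, market_2030, 2030 - 2021)
print(f"Implied annual growth: {rate:.2%}")
```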

But what do we mean by "AI content" exactly? First, we had the proofreading tools. In fact, who hasn't already copied and pasted entire texts into Grammarly? Except that, until now, Grammarly, founded in 2009, corrected your text according to specific spelling and grammatical standards, but the corrector did not respect your style, your tone of voice or your usual way of speaking.

Ten years later, in 2019, OpenAI (a nonprofit that has since become a "capped-profit" company) launched GPT-2, an open source language model with 1.5 billion parameters. The purpose? To automatically generate the next text sequence. A year later, GPT-3 was released. This new model has an impressive 175 billion parameters. After an initial closed beta, OpenAI opened the doors of GPT-3 to the public in November 2021, leading to a proliferation of startups that have been building new tools on top of its API.

Clearly, this is where AI writing as we can experience it today takes off. The idea: from a limited number of inputs or prompts (a title, a few words, a paragraph, an idea, a concept...) and by adding parameters, such as tone, number of words or language level, the AI generates an output (an article, summaries, a call-to-action for a landing page, etc.).
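To make the idea concrete, here is a small illustrative sketch of how a tool might assemble a prompt from a few inputs and parameters before sending it to a language model. Everything in it (the `WritingRequest` fields, the `build_prompt` function, the wording of the prompt) is hypothetical and does not describe how any particular product works; the call to the model itself is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class WritingRequest:
    """Hypothetical set of inputs an AI writing tool might collect."""
    seed_text: str                      # a title, a few words, an idea...
    output_type: str = "article"        # article, summary, call-to-action...
    tone: str = "neutral"
    max_words: int = 300
    language_level: str = "general audience"


def build_prompt(request: WritingRequest) -> str:
    """Turn the user's inputs and parameters into one text prompt."""
    return (
        f"Output type: {request.output_type}\n"
        f"Tone: {request.tone}\n"
        f"Maximum length: {request.max_words} words\n"
        f"Language level: {request.language_level}\n"
        f"Starting point: {request.seed_text}\n"
    )


request = WritingRequest(
    seed_text="How will we write in 2030?",
    output_type="summary",
    tone="playful",
    max_words=100,
)
prompt = build_prompt(request)
print(prompt)
```

The resulting text is what would then be passed to the model, which generates the continuation.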

In France, let's take the example of Webedia (Allociné, PurePeople, 750g): a former intern, Richard Ben, recently published an article documenting how GPT-3 is used within the media group, in particular to paraphrase or generate summaries.

Eventually, mastering these tools will be a differentiating skill. The divide, which already exists among students who use GPT-3 to do their homework, will widen, as talking to AIs to get what you want is not as simple as it seems... In the field of image generation, prompt artists and prompt marketplaces are emerging. Their role? To sell you images produced by AIs without making you write the initial prompt yourself.

"I tell myself that maybe in a few months, the editor who will be able to do prompts on a GPT-3 or its successors, I believe they will have quite a head start."

Grégory Florin, Head of SEO Marmiton / Doctissimo / Auféminin, quoted in the thesis "What place for artificial intelligence in the production and distribution of content?" presented this week at Sciences Po by Olivier Martinez.

A startup ecosystem is emerging

VC firm Sequoia has mapped out the generative AI ecosystem (also known as "synthetic media"), including a section devoted to AI writing tools. The American firm structures its approach around six categories, notably general writing, marketing and sales.

Digging deeper into the subject, we decided to propose an alternative version of this mapping, removing generalist players like Wordtune or the more recent Lex, which, while very useful for the time being, could disappear in a few years if they don't evolve their models.

Indeed, in 2020, Microsoft announced that it had negotiated an exclusive licence with OpenAI for its GPT-3 text generation engine. The integration of GPT-3 in Word is therefore to be expected. Moreover, with DALL-E 2 (OpenAI's other AI, dedicated to image generation, which has been making a lot of noise since its launch in 2022 and its opening to all last month), Microsoft has been even faster: it has just announced its integration into Office. And it's not over yet: the software company is about to tighten its ties with OpenAI even more through a new investment that would value the startup at $20 billion.

Google Docs has evolved in the same way. DeepMind, OpenAI's main competitor, is indeed owned by... Alphabet! And the DeepMind team has very recently documented its work on the Google Cloud suite. While DeepMind is best known to the general public for AlphaGo, which beat Go champion Lee Sedol in 2016, the startup also has its own language processing model, Gopher. It has 280 billion parameters, more than GPT-3. And it proves to be more relevant in most use cases, according to the benchmark conducted by DeepMind itself.

Scaling content production

In concrete terms, in our view, there is a first category of tools (the majority of tools on the market today) whose main objective is to scale content production and, in the long run, replace the production of low-value content. For example, Byword promises to provide you with a file of thousands of SEO pages in a few hours from a simple list of keywords. Cost: $7,500 for 1,000 articles.

Is this a bonanza or a disaster? This booming category (there are more than 40 players, almost all of them launched less than two years ago!) could lead to the production of content that is grammatically correct, but without any real capacity for analysis, without any noticeable differentiation and, above all, ethically questionable. Indeed, while there was a lot of buzz around the supposed "sentience" of a Google AI this summer (i.e., its consciousness or, more precisely, its capacity to feel an emotion), the conclusion is quite clear: we are in fact very far from it! It’s an observation shared at the beginning of the month by Yann LeCun from Meta in a research paper that made a lot of noise.

Rasa Seskauskaite, founder of the startup Anta AI, stresses this point: "When I think of Jasper, my first thought is that it is a pre-trained model, therefore it cannot produce an original thought. It contributes to even more of the same messages that are already out there, just different variations of it. More content, more clickbait, more waste of our focus." Are Google penalties coming soon?

Super-empowered individuals

But, fortunately, we are also seeing the rise of more specialized tools that complement or facilitate work. Their purpose is to augment human intelligence and human creativity by allowing the user, depending on the case, to think better, to learn better, to communicate better, etc. In short, to do better.

Have you always dreamed of writing a book with Kafka, Austen or Dostoyevsky? That's what Laika is working on. The Copenhagen-based startup uses a combination of specific proprietary templates and the good old GPT-2 to offer a library of templates based on famous writers and philosophers (editor's note: all these templates are copyright-free, of course).

Laika also proposes to train AIs on one’s own writing to create a "partner in crime" and eventually exchange "digital brains". Another similar company in this space is Novel AI.

Tired of employees who don't respect the brand's tone in their communications? The start-up Writer gives brand managers the ability to spread and optimize the use of their brand voice among employees thanks to templates that respect rules of use or precise terminologies.

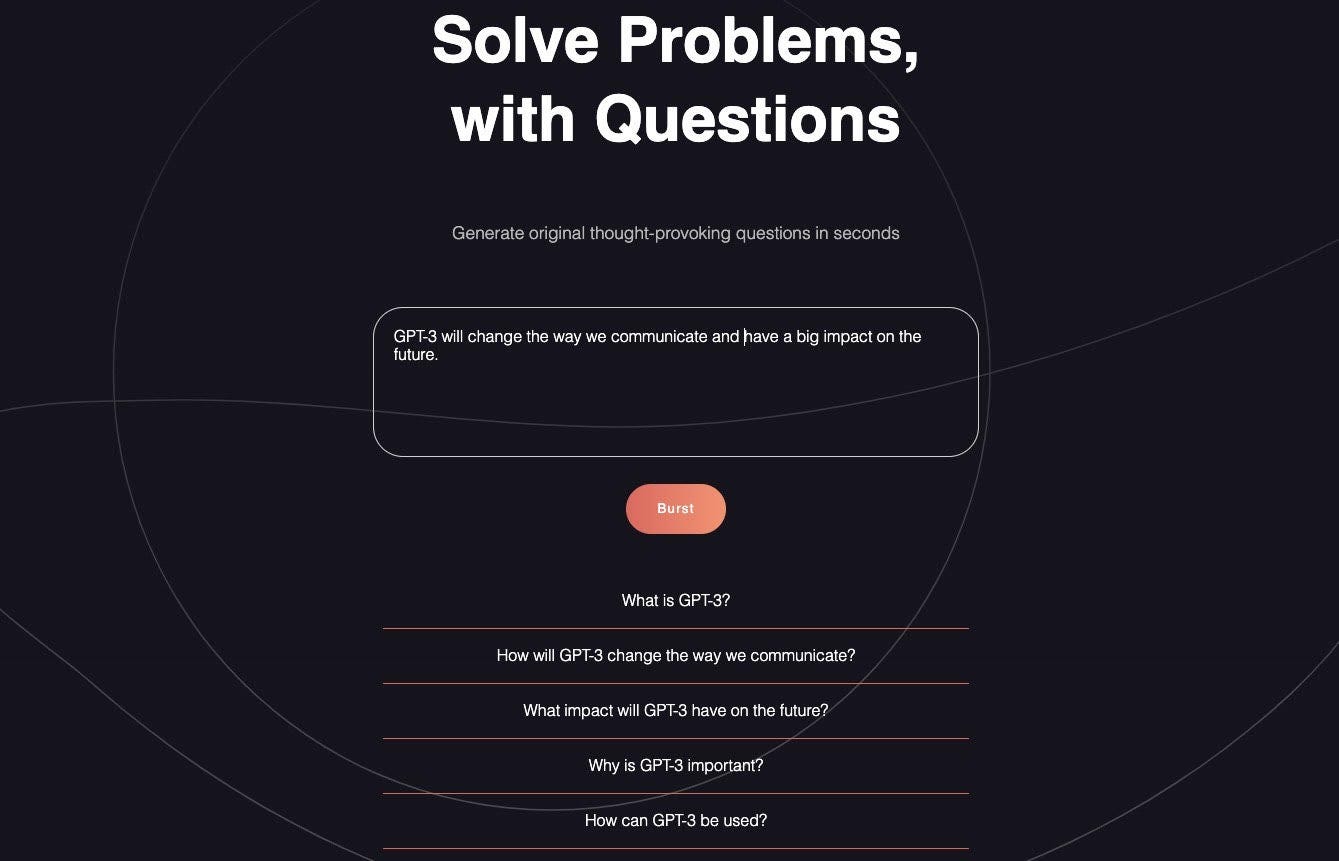

Looking for tools to boost creativity? Rasa Seskauskaite, founder of the aforementioned startup Anta AI, proposes to reverse the logic of classic AI writing tools by helping users develop their critical thinking skills. In particular, she launched QBurst, a GPT-3-based tool that helps people question everything. "We believe that questioning is the key to critical thinking and problem solving, and we are passionate about helping people learn to do this effectively. We have developed a unique approach to teaching questioning skills, and our tool allows people of all ages and backgrounds to learn to question everything they encounter in life."

Want to feel less lonely? Again, turn to AI writing. It may sound surprising, but here's what Sudowrite founder James Yu told us in an email exchange: “We hear all the time from our writers that working with an AI feels like a collaborative experience, and it's nice to know that someone (even if it's a language model) is reading, understanding, and giving feedback on their writing.”

What can we expect by 2030?

As we've seen, AI-enhanced writing has a bright future in store. Whether it is to increase the quantity, the creativity or the fidelity of production (and even the mental health of the creator), AI writing will be even more prevalent in our lives tomorrow. Maybe under the banner of the startups mentioned above, but more certainly in a fully integrated and invisible way within existing services, in the hands of the giants.

Nevertheless, as for any technology, the above-mentioned contributions cannot hide the limits that AI writing will have to face. To understand them, there is no need to travel to the year 2030. And we won't go into details, which could be the subject of another (long) newsletter, but let's note a few points:

First of all, the boom in the number of companies specializing in AI writing will quickly come up against reality. The cost of developing models, and therefore their cost of use, quickly becomes extremely high (Davinci, the most powerful OpenAI model, costs 2 cents per 1,000 tokens, or about 750 words. At that rate, our roughly 1,800-word article would have cost us about 5 cents. Not expensive at first glance, but what if we move to larger-scale use?). In this respect, 2023 should be an interesting year, a kind of inflection point. Even before reaching profitability, the companies involved in AI writing will quickly have to prove the value of their business models.
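To see how quickly this adds up at scale, here is a back-of-the-envelope sketch based on the Davinci price and the words-per-token ratio quoted above. The function name and the example volume (1,000 articles of 1,500 words each) are our own illustrative assumptions, and the estimate only counts generated text, not the prompts sent to the model.

```python
# Figures quoted in the article for OpenAI's Davinci model.
PRICE_PER_1000_TOKENS_USD = 0.02
WORDS_PER_1000_TOKENS = 750


def estimate_cost_usd(word_count: int) -> float:
    """Rough generation cost in dollars for a given number of words."""
    tokens = word_count * 1000 / WORDS_PER_1000_TOKENS
    return tokens / 1000 * PRICE_PER_1000_TOKENS_USD


# Hypothetical volume: 1,000 articles of 1,500 words each.
single_page = estimate_cost_usd(750)
bulk_order = estimate_cost_usd(1_000 * 1_500)
print(f"750 words: ${single_page:.2f}")
print(f"1,000 articles of 1,500 words: ${bulk_order:.2f}")
```

In practice a tool generates several drafts and sends long prompts for every piece of text, so real usage costs would likely be a multiple of this floor.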

Secondly, calls for regulation, and in particular environmental regulation, of these models are becoming louder. The University of Copenhagen (from which the above-mentioned start-up Laika originated) has published a conversion of the ecological cost of training GPT-3 into the number of trips to the moon. For us, this figure means nothing in itself since it treats too many parameters as static (an AI is not trained just once, its training cost is not the same in 2023 as in 2020...), but it gives us simple reference points.

And this brings us to our third and final limitation of these models: ethics. There are real fears around the development of such solutions. The most common objection to GPT-3, for example, is that the AI is trained on data from the internet, with pronounced biases on questions of racism, gender, political opinions... But fear is one thing, certainly controllable by the giants behind these technologies, and the perception of fear is another. So we come back to the following observation: the future of AI writing lies in its capacity to become indispensable without being identified.

-

Marie Dollé, Benoît Zante, Quentin Franque.

PS2. If you want to gain access to the Excel file containing the details of the 80+ AI writing start-ups, reach out!
