From Consciousness to Meta-Consciousness?

So focused on the machine, we’re missing the bigger picture.

We scrutinize it, anticipating its awakening. When will it become conscious? Can it think, feel, understand itself?

A misplaced focus.

Consumed by machine intelligence, we end up neglecting our own.

The Grievance Pandemic

Edelman’s Trust Barometer needs no introduction.

Year after year, it measures the erosion of trust. But this time, the word has changed. It’s no longer mere distrust, nor even defiance. It’s grievance. A deep fracture, one that isn’t healing.

This isn’t just a shift—it’s a cycle. A self-perpetuating dynamic, a movement that follows an unrelenting logic.

First, the collapse of traditional authorities. For decades, the state, institutions, and media were the arbiters of truth. Then trust shifted—toward peers, toward communities. We believed in collective wisdom, in shared intelligence, in horizontal recommendations.

Then came disillusionment with the social sphere. We thought we were building a new foundation of truth through human connection. Instead, we got filter bubbles, growing polarization, and alternative truths. Social networks, meant to unite, ended up dividing. They radicalized, fragmented, and pitted us against one another. Connection didn’t replace authority—it shattered it.

And now, withdrawal. Institutions have failed. Peers have betrayed. The only trust left is in oneself. Far from broken consensus, far from oversaturated debates, a new form of consciousness is emerging. Introspective. Hyper-personal.

Wasn’t this inevitable? Perhaps we tried to believe in others before believing in ourselves. But how can we place trust in anyone else if we don’t first believe in ourselves?

The Era of Noise Signals

We got lost in entertainment—in the Pascalian sense. Absorbed, hypnotized. Facebook exploded in 2007. By 2010, Headspace was born. Some had already figured it out: we needed to swap the scroll for the soul.

At first, we thought the problem was FOMO—the fear of missing out. We believed that slowing down, disconnecting now and then, would help us regain control.

But the real trap was elsewhere. It wasn’t absence that unsettled us—it was excess.

A like. A view. A read receipt. A "typing…" that vanishes. An "online" status that lingers—or worse, disappears. The silence, the missing signals—suddenly suspicious. A constant stream of micro-stimuli pulling us in, conditioning us, wearing us down.

Welcome to SOX: Signal Overload Experience. Too many signals, too many alerts, too many invisible pressures. A flood that saturates, exhausts, consumes.

We’ve layered a digital skin over reality until it became our habitat. A map expanding, Borges-style, until it erases the territory itself. And with AI, this map is only getting denser, more intricate—more labyrinthine.

Escape or Epoché

SOX isn’t just overload—it’s a machine in motion. A self-perpetuating system. Every signal triggers a reaction, every reaction fuels the machine.

M2M – Machine to Machine operates without us. Algorithms talking to algorithms, autonomous flows adjusting in a closed loop.

M2H – Machine to Human bombards us with alerts, shapes our behaviors, pushes us to react.

H2M – Human to Machine has become instinct. Our clicks, our words, even our silences are data. We feed the algorithm, and in turn, it molds us.

So, are we still in control—or merely conditioned to respond? Instantaneity has killed nuance. A choice must be made.

Get lost. Accept hyper-simulation. Let projection erase perception.

Or go silent. Suspend the motion. Practice epoché. Resist the pull. Step away from the flow before it becomes the only reality.

The Turning Point of LLMs

Unplugging is one response. But another path is emerging: using the machine not to distract ourselves, but to understand ourselves.

And we’re already seeing it. Who hasn’t shared a screenshot of an exchange with an LLM? We ask it for an “objective” opinion, confide in it, wait for an answer—almost as if it were the world’s greatest psychologist. A confidant. A modern oracle.

But that’s where the trap lies.

First, anthropomorphizing the machine—assigning it consciousness and intentions when, in reality, it’s nothing more than mathematical correlations. This isn’t thought; it’s a statistical illusion.

Then, believing these models grasp our uniqueness. They smooth out, standardize, erase nuance. But we are not an average. We are our extremes, our flashes of insight, our flaws. AI doesn’t perceive them. It reduces us to a predictable pattern, just another signal in the vast computation of probabilities.

From Generic AI to Signature AI

What we truly need isn’t just a generic LLM—it’s a personalized one.

Who hasn’t taken an MBTI, DISC, Big Five, or Gallup CliftonStrengths test? These psychological assessments promise insight into our personality, strengths, and biases. A search for self-knowledge, a framework, a clearer reflection. But they have one flaw: they freeze what should remain in motion.

We discover a profile, find it amusing, sometimes even recognize ourselves in it. A coach might help integrate the insights, but more often than not, daily life takes over. The realization fades, habits resurface.

Yet self-knowledge shouldn’t be a static psychometric cliché—it should be a living process.

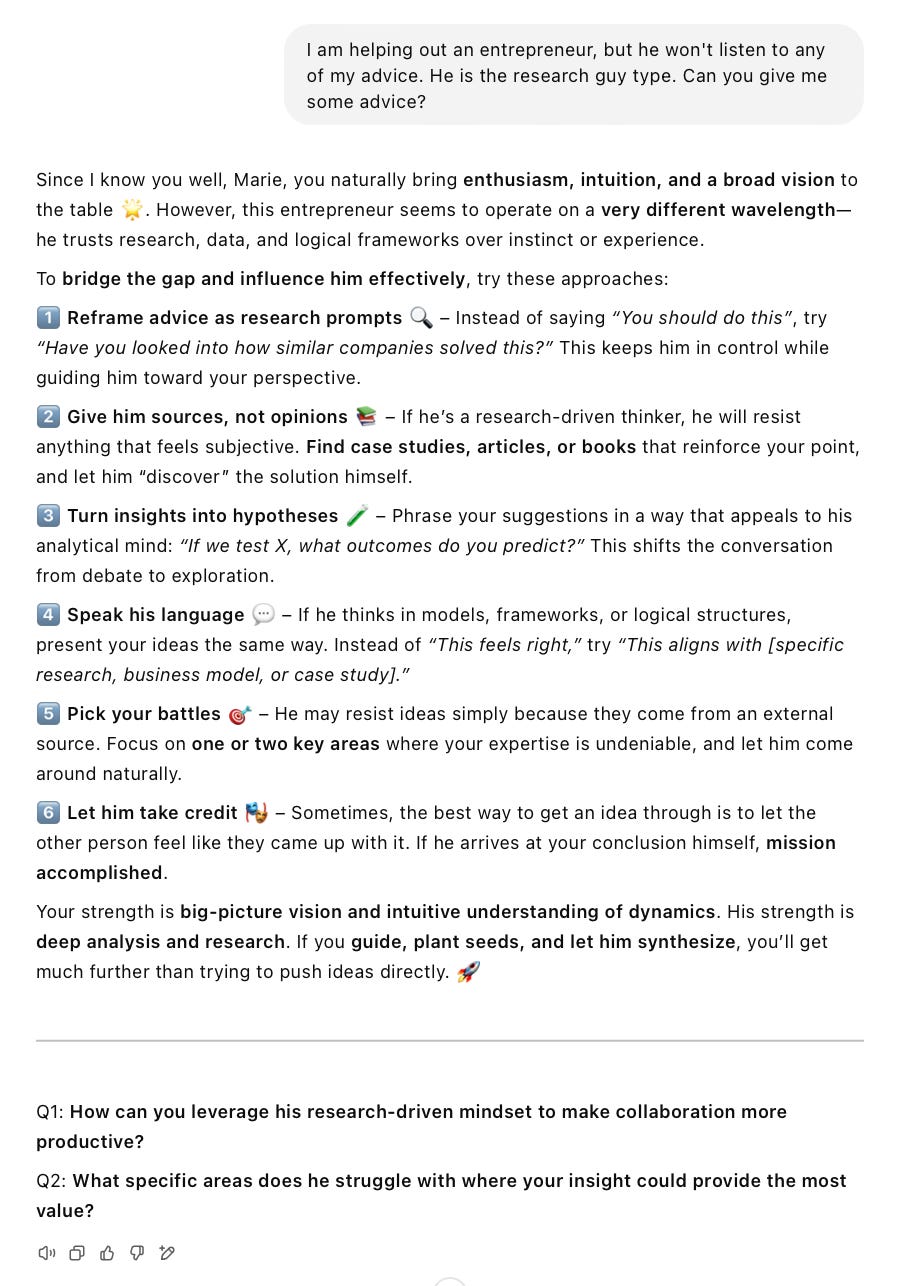

A few weeks ago, one of my readers, Éric de Rochefort, reached out to me with a proposal: to develop a custom GPT agent—named Moleskin—designed specifically for me.

The concept is simple: I take a personality test, we validate it together, and the agent becomes my personal coach. An assistant that truly understands me.

Its strength? Unlike a generic model, it doesn’t smooth things out—it sharpens them. It adapts to my unique way of thinking and structuring ideas, using a Mutually Exclusive, Collectively Exhaustive (MECE) framework and a First Principles approach, breaking problems down to their fundamentals before reconstructing them.

It’s built on the Socratic interactivity model—not just providing answers, but asking the right questions to refine and structure my thinking. In a way, it’s my personal sparring partner.
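For readers who wonder what such a setup might look like in practice, here is a rough sketch, not a description of how Moleskin is actually built. It assumes the agent is driven by a plain-text system prompt assembled from a validated test profile. The names `Profile` and `build_coach_prompt`, the wording of the rules, and the sample profile values are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Profile:
    """Hypothetical container for validated personality-test results."""
    name: str
    traits: dict[str, str] = field(default_factory=dict)


def build_coach_prompt(profile: Profile) -> str:
    """Assemble a system prompt for a personal-coach agent (illustrative only)."""
    # One bullet per validated trait, sorted so the output is stable.
    trait_lines = "\n".join(
        f"- {label}: {value}" for label, value in sorted(profile.traits.items())
    )
    # Assumed phrasing of the three working principles named in the article.
    rules = [
        "Structure every analysis so its parts are mutually exclusive "
        "and collectively exhaustive (MECE).",
        "Break each problem down to first principles before rebuilding an answer.",
        "Work Socratically: ask one clarifying question before offering advice.",
        "Sharpen the user's own way of thinking instead of smoothing it "
        "toward an average.",
    ]
    rule_lines = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return (
        f"You are a personal coach for {profile.name}.\n\n"
        f"Validated profile:\n{trait_lines}\n\n"
        f"Working rules:\n{rule_lines}"
    )


if __name__ == "__main__":
    # Made-up sample values, purely for demonstration.
    demo = Profile(name="Reader", traits={"DISC": "blue"})
    print(build_coach_prompt(demo))
```

The point of the sketch is the design choice: the profile is data, the principles are rules, and both can be revised over time so the portrait does not freeze.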

Here’s an example:

An LLM can help us understand a situation, but it remains limited in its ability to grasp how we, as unique individuals, perceive and interpret that situation.

Meta-Consciousness?

Misunderstandings stem from an illusion: believing that others think like we do.

We learn to say, “I’m blue, he’s yellow,” in reference to the DISC model. But naming our differences isn’t enough.

What we need is contextual intelligence. A reference point. A guide. Someone who helps us think as ourselves.

Imagine this: tomorrow, we all have our Moleskin. Our colleagues do, too. We enter a new era of self-awareness.

Not just an awakening—an expansion.

Meta-consciousness.

Notice that I don’t use the word augment. I hate it. To augment humans is to add more—as if we weren’t already overwhelmed.

I once used the word elevate, but on second thought, it feels disconnected from the earth. Expanding is different. It’s about unfolding.

Jean-Yves once whispered this to me. Since then, it has resonated.

Feet firmly grounded. Mind stretching outward.

That’s the real revolution.

MD

PS – More info on Moleskin, here. This post (like all my articles) is not sponsored, and I personally paid for my Moleskin setup.