A few weeks ago, DeepSeek — a large language model made in China — made headlines. Among the many reasons: it was one of the first to bring Chain of Thought prompting into the spotlight — a technique that guides AI to reason step by step, laying out its logic as if thinking out loud.

Sure, other models already had that capability. But DeepSeek put it center stage.

And yes — let’s be honest — watching a machine “think” (quotation marks fully deserved) is impressive. It feels clever. Almost human.

Cue the collective shiver: “Is this… starting to think like us?”

Well — not really.

Actually? Not at all.

Because in Chain of Thought, the operative word isn’t thought — it’s chain. And that’s exactly where the problem begins. If anything, it should have been called Thought in Chains.

A chain is a logical sequence. Ordered. Controlled. Secure. Its strength depends on its weakest link. And like any chain — it can also confine.

But humans don’t work like that.

Humans are fascinating creatures. We have this strange ability to listen without hearing. To nod in a meeting while our brain runs through the grocery list. To say “yes” to a question we didn’t understand. Fully present in body, miles away in mind.

That drift — that mental slip — is part of the process. What we call “losing the thread” is often the moment something deeper begins to take shape. Remember the monkey from The Simpsons? The one banging cymbals in Homer’s head? Not so dumb, actually. It keeps the rhythm going while something more meaningful brews underneath.

Call it a cognitive dropout.

That in-between moment — where thought floats, unanchored. In a machine, it’s a bug. Something to fix. In a human, it’s a pause. A space we honor. A silence that does its quiet work. And sometimes, it’s there — precisely there — that thought begins to emerge. Or not. Because for humans, thinking isn’t always about doing more. Sometimes it’s doing less. Sometimes it’s doing nothing. And that’s exactly how it should be.

When humans learn, it’s rarely clean. It’s not linear. We veer off course, get lost, stumble, circle back. A primitive — yet living — form of backpropagation. Not coded, but experienced.

Yes, we correct ourselves. But we do it by going through the mess of experience and weaving meaning, not by following gradients through a parameter space.

Our reasoning moves between two poles. Sometimes we start with facts and follow them to conclusions (deductive reasoning); other times, we begin with a hunch, a hypothesis, to make sense of what we observe (abductive reasoning). And often, we do both at once. Because humans don’t think in trees. We think in spirals.

It’s not optimized. It’s messy, uncertain, alive. It’s embodied.

Chain of thought? No. Chaos of thought.

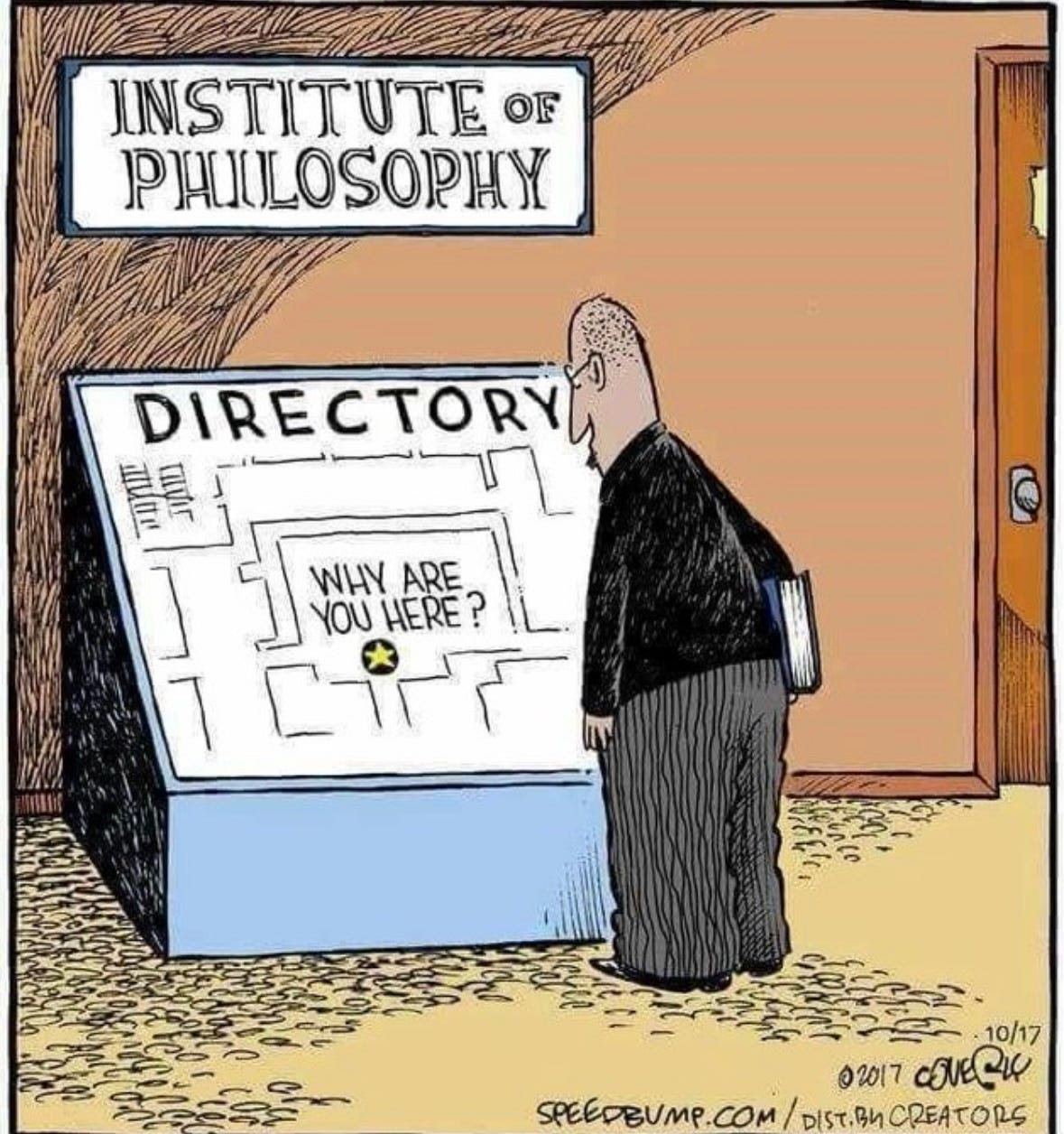

And this cartoon says it all:

The robot recalibrates. The human drifts. One backpropagates — the other digests.

Kurt Gödel saw it coming: “Either mathematics is too big for the human mind, or the human mind is more than a machine.”

Call it the Gödelian Effect: those moments when calculation alone isn’t enough. When we need to step outside the frame. To reimagine. To take a sideways step.

An intelligence that is embodied — not modeled.

And sometimes, that step looks like this:

You come looking for an answer — and get a question instead. And somehow, it works. You’ve landed exactly where you needed to be.

That’s what machines can’t do. They can’t point toward a void that opens. They don’t understand what it means to ask: “Why are you here?” — not in space, but in existence.

And that brings us back to the elephant in the room.

Because there are things a machine simply can’t conceptualize. Not yet. Maybe never.

Absences. Paradoxes. Loose connections. Nonsensical images that somehow make sense.

Humans feel that. We notice what’s missing. We sense when something’s off. We invent where there is nothing. We understand the incongruous, the useless, the ambiguous.

And maybe that’s where everything turns. Not in processing power — but in the ability to hack meaning. To think backwards. To ask the wrong question in just the right place.

Thinking isn’t just reasoning. It’s the act of inventing where there’s no reason to.

And for now — that’s still ours.

MD & JLA

Jean-Louis Amat has been working in AI since 1984 (PhD at IBM). His career, spanning both academia and industry, has given him a broad and hands-on perspective on the field — one he regularly shares.
